Decoding Under-Resolved Observations of Turbulence:

Measurements-Infused Simulations

Tamer Zaki

Department of Mechanical Engineering

Johns Hopkins University

U.S.A.

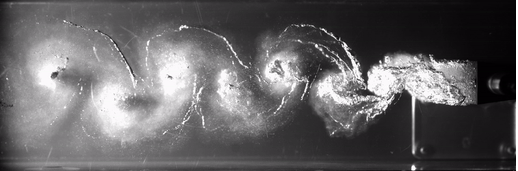

High-fidelity simulations of turbulence provide non-intrusive access to all the resolved flow scales and any quantity of interest. However, simulations often invoke idealizations that compromise realism (e.g., truncated domains and modelled boundary conditions). Experiments, on the other hand, examine the true flow with fewer idealizations, but they continually contend with limited spatio-temporal sensor resolution and the challenge of directly measuring quantities of interest. By assimilating observations, however scarce, in simulations, we can leverage the advantages of both approaches and mitigate their respective deficiencies. The simulations thus achieve a higher level of realism by tracking the true flow state, and we can probe any flow quantity of interest at higher resolution than the original measurements. The data-assimilation problem is formulated as a nonlinear optimization, where we seek the flow field that satisfies the Navier-Stokes equations and optimally reproduces the available data. In this framework, measurements are no longer mere records of instantaneous, local flow quantities, but rather an encoding of the antecedent flow events that we decode using the governing equations. Chaos plays a central role in obfuscating the interpretation of the data. Measurements that are infinitesimally close may be due to entirely different earlier conditions, a dual to the famous butterfly effect. We will examine several data-assimilation problems in wall turbulence and establish the minimum resolution of measurements for which we can accurately reconstruct all the missing flow scales. We will highlight the roles of the Taylor microscale and the Lyapunov timescale and discuss the fundamental difficulties of predicting turbulence from limited observations.
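
The nonlinear optimization described above can be sketched in a generic variational form. This is an illustrative formulation under assumed notation, not necessarily the one used in the talk: the control variable is taken to be the initial flow state $\mathbf{u}_0$, $\mathcal{N}_t$ denotes the Navier-Stokes solution operator that advances $\mathbf{u}_0$ to time $t$, $\mathcal{H}$ is a hypothetical observation operator that maps the full flow state to the sensor quantities, and $\mathbf{m}_n$ are the measurements recorded at times $t_n$.

```latex
\min_{\mathbf{u}_0} \; J(\mathbf{u}_0)
  = \frac{1}{2} \sum_{n=1}^{N}
    \left\| \mathbf{m}_n - \mathcal{H}\!\left(\mathbf{u}(t_n)\right) \right\|^2
\quad \text{subject to} \quad
\mathbf{u}(t) = \mathcal{N}_t(\mathbf{u}_0)
```

In this sketch, the constraint enforces the governing equations, while the cost $J$ penalizes the mismatch between the simulated and the measured observations. The choice of norm and the possible addition of regularization or boundary-condition controls are left open here.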